Smart instruments are reshaping the music industry by blending traditional craftsmanship with cutting-edge technology. These instruments respond to a musician’s intent, environment, and style, adapting in real time to how and where they are played. From live concerts to studio sessions, they offer dynamic control, seamless connectivity, and personalized feedback. This article explores eleven key innovations driving this evolution and shows how each one lets artists focus on creativity rather than on technical limitations.

| Table of Contents | |
|---|---|
| I. | AI-Driven Real-Time Adaptive Sound |
| II. | Multi-Modal Sensor Integration |
| III. | Networked Low-Latency Connectivity |
| IV. | Cloud-Based Collaborative Platforms |
| V. | Augmented/Virtual Reality Interfaces |
| VI. | On-Board Machine Learning for Style Emulation |
| VII. | Self-Tuning and Auto-Calibration Systems |
| VIII. | Advanced DSP Chipsets |
| IX. | Haptic and Tactile Feedback Mechanisms |
| X. | Data Analytics & Performance Insights |
| XI. | Modular, Upgradable Hardware-Software Ecosystems |

AI-Driven Real-Time Adaptive Sound

By embedding machine-learning models, instruments analyze playing dynamics and room acoustics in real time, then adjust tone, effects, and resonance to match the performer’s style and the venue. This adaptive processing reduces the need to tweak settings by hand between songs or takes, letting artists concentrate on expression. Because the system keeps learning from each note and chord progression, it can hold audio quality consistent from song to song and venue to venue with minimal intervention.

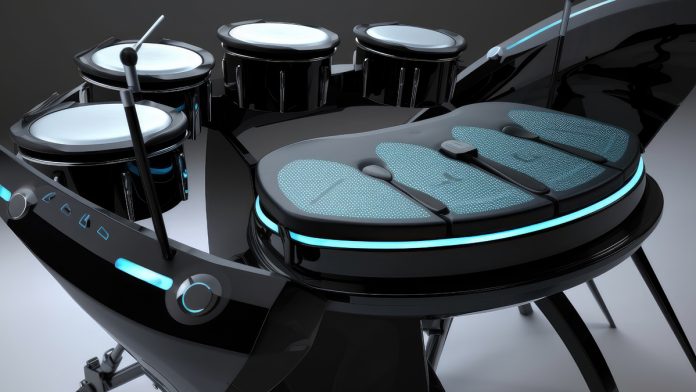
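The adaptive processing described in this section relies on learned models, but the core loop of following the player's level and adjusting tone can be shown with a much simpler rule. The sketch below is not machine learning: a fixed mapping stands in for a learned model, and the function names, the 800 Hz to 8 kHz cutoff range, and the 0.5 RMS "full-scale" calibration are all illustrative assumptions. It tracks loudness block by block and opens a tone filter's cutoff as the playing gets louder.

```python
import math

def block_rms(samples):
    """Root-mean-square level of one block of samples (floats in [-1, 1])."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def adaptive_cutoffs(blocks, min_hz=800.0, max_hz=8000.0, smoothing=0.9, full_scale_rms=0.5):
    """Return a low-pass cutoff (Hz) for each audio block, driven by playing level.

    Louder playing opens the filter (brighter tone); softer playing closes it.
    All default values are illustrative assumptions, not tuned settings.
    """
    level = 0.0
    cutoffs = []
    for block in blocks:
        # One-pole smoothing so the tone does not jump from block to block.
        level = smoothing * level + (1.0 - smoothing) * block_rms(block)
        # Normalized level in [0, 1]; anything at or above full_scale_rms counts as "full".
        t = min(level / full_scale_rms, 1.0)
        # Interpolate on a logarithmic frequency scale, so equal level steps give equal musical intervals.
        cutoffs.append(min_hz * (max_hz / min_hz) ** t)
    return cutoffs
```

A learned system would replace the fixed `min_hz`/`max_hz` mapping with parameters fitted to the performer and the room; the smoothing step matters either way, because an unsmoothed level makes the tone flutter.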

Multi-Modal Sensor Integration

Next-generation instruments combine pressure pads, motion trackers, and touch‑sensitive surfaces to capture nuanced gestures. These sensors detect subtle changes in force, position, and movement, translating them into expressive musical variations. For example, a violin equipped with accelerometers can modulate vibrato depth based on hand motion. By integrating multiple sensor types, the instrument gains a rich data stream, enabling performers to explore new playing techniques and expand their creative vocabulary.
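The violin example can be sketched as a mapping from accelerometer readings to vibrato depth, followed by the vibrato itself. This is a hypothetical illustration: the function names, the 5 m/s² full-scale motion, and the 50-cent maximum depth are assumptions, and subtracting gravity from the vector magnitude is only a rough way to isolate hand motion.

```python
import math

GRAVITY = 9.81  # m/s^2, present in every accelerometer reading at rest

def vibrato_depth_cents(ax, ay, az, full_scale=5.0, max_depth=50.0):
    """Map an accelerometer reading (m/s^2) to a vibrato depth in cents.

    Subtracting gravity from the vector magnitude roughly isolates hand motion.
    `full_scale` (motion that gives maximum depth) and `max_depth` are
    hypothetical tuning values.
    """
    motion = abs(math.sqrt(ax * ax + ay * ay + az * az) - GRAVITY)
    return max_depth * min(motion / full_scale, 1.0)

def vibrato_pitch(base_hz, depth_cents, rate_hz, t):
    """Instantaneous frequency (Hz) of a note with sinusoidal vibrato at time t (s)."""
    cents = depth_cents * math.sin(2.0 * math.pi * rate_hz * t)
    return base_hz * 2.0 ** (cents / 1200.0)
```

A still hand yields zero depth, and larger motions widen the vibrato up to the cap, so the player controls expression through gesture rather than a separate knob.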

Networked Low-Latency Connectivity

Modern stages demand seamless communication between devices. Instruments with networked low-latency links synchronize audio, MIDI, and control signals across on-stage gear and back-of-house systems. Audio-networking protocols such as Dante and AVB synchronize device clocks far more tightly than a millisecond and keep local-network latency on the order of a millisecond, which is crucial for tight ensemble playing. Extending these links beyond the venue lets remote collaborators join rehearsals or performances in real time, breaking geographical barriers, although over long distances the physical propagation delay sets a floor that no protocol can remove. Musicians can also share control data, patch changes, and live mixes with sound engineers instantly and reliably.
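Why a local link can be so fast while a long-distance one cannot comes down to simple arithmetic. The sketch below does not model Dante or AVB themselves; it just adds up buffering delay and propagation delay, using the approximate figure of 2 × 10⁸ m/s for light in optical fiber. The function names and the optional per-hop switch delay are assumptions for illustration.

```python
FIBER_SPEED_M_PER_S = 2.0e8  # approximate speed of light in optical fiber (about 2/3 of c)

def buffer_latency_ms(frames, sample_rate_hz):
    """Delay from waiting for one buffer (or network packet) of audio frames to fill."""
    return 1000.0 * frames / sample_rate_hz

def propagation_latency_ms(distance_km):
    """One-way travel time over fiber; no protocol can reduce this."""
    return 1000.0 * (distance_km * 1000.0) / FIBER_SPEED_M_PER_S

def one_way_latency_ms(frames, sample_rate_hz, distance_km, hops=0, per_hop_ms=0.0):
    """Rough one-way budget: buffering + propagation + per-switch forwarding delay.

    `hops` and `per_hop_ms` are placeholders for switch delays you would measure.
    """
    return (buffer_latency_ms(frames, sample_rate_hz)
            + propagation_latency_ms(distance_km)
            + hops * per_hop_ms)
```

With these numbers, a 48-sample buffer at 48 kHz costs 1 ms, while 1,000 km of fiber alone adds 5 ms each way. On a stage the distance term is negligible; across a continent it dominates.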

Cloud-Based Collaborative Platforms

Cloud integration brings collaborative music‑making to a global scale. Artists can upload performance data, presets, and stems, then invite others to contribute, tweak settings, or remix parts. These platforms store version histories, enabling iterative refinement across time zones. Producers can access live tracking information to fine‑tune mixes, while composers share interactive templates. By centralizing creative assets online, musicians foster a fluid workflow where ideas evolve collectively, driving innovation beyond the studio walls.
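A version history like this can be modeled as a chain of content-addressed snapshots, in the spirit of Git. The `PresetHistory` class below is a hypothetical, in-memory sketch, not any platform's actual storage format: each commit hashes the preset, its author, and its parent's id with SHA-256, so every version gets a stable identifier and the chain can be walked back to the first upload.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

@dataclass
class Version:
    version_id: str
    parent_id: Optional[str]
    author: str
    preset: dict

@dataclass
class PresetHistory:
    """Hypothetical in-memory version history for one shared preset."""
    versions: Dict[str, Version] = field(default_factory=dict)
    head: Optional[str] = None

    def commit(self, author: str, preset: dict) -> str:
        # Hash a canonical JSON encoding of the parent id, author, and settings,
        # so the same settings committed at different points get distinct ids.
        payload = json.dumps({"parent": self.head, "author": author, "preset": preset}, sort_keys=True)
        version_id = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]  # shortened for readability
        self.versions[version_id] = Version(version_id, self.head, author, dict(preset))
        self.head = version_id
        return version_id

    def log(self) -> List[Tuple[str, str]]:
        """List (version_id, author) pairs from newest to oldest."""
        entries = []
        current = self.head
        while current is not None:
            version = self.versions[current]
            entries.append((version.version_id, version.author))
            current = version.parent_id
        return entries
```

Because each version points to its parent, collaborators in different time zones can compare or roll back to any earlier state; a real platform would add persistence, branching, and merge handling on top.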

Augmented/Virtual Reality Interfaces

AR and VR open immersive dimensions for performance and practice. Through headsets or mobile devices, musicians overlay digital interfaces onto real instruments or play entirely virtual ones. Visual cues guide finger placement, chord transitions, and improvisation paths, accelerating learning. In VR concert halls, artists perform before avatars of fans worldwide, interacting in shared virtual spaces. These environments elevate audience engagement and redefine stagecraft, merging the physical and digital realms of music.
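For fretted instruments, an AR overlay that highlights finger placement first has to know where each fret sits. Under 12-tone equal temperament, each fret shortens the vibrating string by a factor of 2^(-1/12), so fret n lies at L(1 - 2^(-n/12)) from the nut, where L is the scale length. In the sketch below, the 648 mm scale length is just an example value and the rule of drawing the marker midway between frets is a hypothetical choice.

```python
def fret_distance_mm(scale_length_mm, fret):
    """Distance from the nut to `fret` under 12-tone equal temperament (fret 0 is the nut)."""
    return scale_length_mm * (1.0 - 2.0 ** (-fret / 12.0))

def marker_position_mm(scale_length_mm, fret):
    """Hypothetical overlay rule: draw the finger marker midway between the previous fret and this one."""
    return (fret_distance_mm(scale_length_mm, fret - 1) + fret_distance_mm(scale_length_mm, fret)) / 2.0

SCALE_MM = 648.0  # example scale length, close to a common electric-guitar scale
frets = [round(fret_distance_mm(SCALE_MM, n), 1) for n in range(1, 13)]
```

As a sanity check, the 12th fret lands at exactly half the scale length (324 mm here), the octave point.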

On-Board Machine Learning for Style Emulation

Embedded neural networks analyze recordings to learn an artist’s characteristic tone and phrasing. Once trained, these on-board models let instruments mimic the sound and feel of famous performers or custom presets. Guitarists, for instance, can switch between emulations of classic amplifiers or pedal chains with a turn of a knob. This stylistic versatility encourages exploration, letting musicians experiment across genres without carrying heavy gear or assembling complex setups.
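Real style emulation relies on trained neural models, which are beyond a short example. As a plainly simpler stand-in, the sketch below switches between hypothetical amplifier presets that each apply a tanh soft-clipping curve, a common simple model of amplifier saturation. The preset names and the `drive`/`level` values are invented for illustration.

```python
import math

# Hypothetical presets; a trained neural model would replace this fixed curve.
AMP_PRESETS = {
    "clean": {"drive": 1.0, "level": 1.0},
    "crunch": {"drive": 4.0, "level": 0.8},
    "high_gain": {"drive": 20.0, "level": 0.6},
}

def emulate(samples, preset_name):
    """Apply tanh soft clipping with the chosen preset's drive and output level.

    Dividing by tanh(drive) normalizes the curve so a full-scale input (1.0)
    comes out at `level`, whatever the drive setting.
    """
    preset = AMP_PRESETS[preset_name]
    drive, level = preset["drive"], preset["level"]
    norm = math.tanh(drive)
    return [level * math.tanh(drive * s) / norm for s in samples]
```

Switching sounds is then just a matter of passing a different preset name, which mirrors the knob turn described above; higher drive pushes quiet notes closer to the ceiling, giving the compressed, saturated feel of a high-gain amp.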

Self-Tuning and Auto-Calibration Systems

Instruments equipped with precise motor controls and calibration software automatically tune strings or adjust key action. They compensate for temperature changes, string wear, or humidity, ensuring optimal playability. During live sets, automatic re‑tuning between songs saves time and reduces interruptions. Calibration routines also align sensor inputs and audio outputs, maintaining consistency across stages. By handling maintenance tasks autonomously, these systems free performers to focus on artistry rather than technical upkeep.
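Automatic tuning is essentially a feedback loop: measure the pitch, compute how far it is from the target in cents, correct part of the error, and repeat. The sketch below assumes two hypothetical hooks, `read_pitch` for a pitch detector and `adjust_cents` for a tuning motor, and uses a toy simulated string in place of real hardware; the 0.8 gain and 1-cent tolerance are illustrative choices.

```python
import math

def cents_off(measured_hz, target_hz):
    """Pitch error in cents (100 cents = one equal-tempered semitone); negative means flat."""
    return 1200.0 * math.log2(measured_hz / target_hz)

def auto_tune(read_pitch, adjust_cents, target_hz, tolerance_cents=1.0, gain=0.8, max_iters=20):
    """Proportional control loop: measure, correct part of the error, repeat.

    `read_pitch` and `adjust_cents` are hypothetical hooks for a pitch detector
    and a tuning motor. Returns (converged, number_of_corrections).
    """
    for step in range(max_iters):
        error = cents_off(read_pitch(), target_hz)
        if abs(error) <= tolerance_cents:
            return True, step
        adjust_cents(-gain * error)  # gain < 1 corrects only part of the error to avoid overshoot
    return False, max_iters

class SimulatedString:
    """Toy stand-in for hardware: the pitch moves exactly as commanded."""

    def __init__(self, freq_hz):
        self.freq_hz = freq_hz

    def read(self):
        return self.freq_hz

    def adjust(self, cents):
        self.freq_hz *= 2.0 ** (cents / 1200.0)

a_string = SimulatedString(432.0)  # e.g. an A string that has drifted flat
converged, corrections = auto_tune(a_string.read, a_string.adjust, target_hz=440.0)
```

Starting about 32 cents flat, the loop reaches the 1-cent tolerance after three corrections. A real motor never moves pitch exactly as commanded, which is why a gain below 1 is a safer default than trying to fix the whole error in one step.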

Advanced DSP Chipsets

Dedicated digital signal processing (DSP) chips power real-time effects, synthesis, and dynamic control with minimal power draw. These specialized processors run complex algorithms, such as granular synthesis or adaptive filtering, directly on the instrument’s hardware. Doing so offloads work from external computers, reducing setup complexity and potential latency. Musicians benefit from a compact form factor and reliable performance, whether on a tour bus or in the studio, with powerful audio processing always at their fingertips.
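Adaptive filtering can be illustrated with the least-mean-squares (LMS) algorithm, a classic choice for adaptive FIR filters. This is a plain-Python sketch for readability, not how a DSP chip would implement it (a chip would typically use fixed-point or vectorized arithmetic), and it is not a claim about which algorithm any particular chipset runs. The demo identifies a hypothetical "unknown" three-tap response from its input and output.

```python
import random

def lms_filter(x, d, num_taps, mu):
    """Least-mean-squares (LMS) adaptive FIR filter.

    For each sample, filters the recent inputs with weights w, compares the
    result with the desired signal d, and nudges w to reduce the squared error:
    w <- w + mu * e[n] * x_window. Returns the final weights and the error history.
    """
    w = [0.0] * num_taps
    window = [0.0] * num_taps  # window[k] holds x[n - k]
    errors = []
    for xn, dn in zip(x, d):
        window = [xn] + window[:-1]
        y = sum(wk * xk for wk, xk in zip(w, window))
        e = dn - y
        w = [wk + mu * e * xk for wk, xk in zip(w, window)]
        errors.append(e)
    return w, errors

# Demo: learn a hypothetical "unknown" 3-tap response from its input and output.
random.seed(0)
unknown = [0.5, -0.3, 0.1]
x = [random.uniform(-1.0, 1.0) for _ in range(5000)]
d = [sum(h * x[n - k] for k, h in enumerate(unknown) if n - k >= 0) for n in range(len(x))]
w, errors = lms_filter(x, d, num_taps=3, mu=0.05)
```

After the run, `w` closely matches `unknown`. The step size `mu` trades adaptation speed against stability and noise; in an instrument, the same update could track a changing feedback path or room response instead of a fixed system.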

Haptic and Tactile Feedback Mechanisms

By integrating vibration motors and force feedback, instruments convey information directly to the player’s hands. For example, a piano keyboard can subtly pulse to indicate tempo, or a drum pad can simulate stick rebound. These tactile cues assist with timing, dynamics, and technique without diverting the musician’s gaze from other performers. Feedback intensity can scale with phrasing or volume, creating a two-way interaction in which the instrument speaks back to the artist.
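Tempo pulses like the keyboard example can be scheduled with a few lines of code. This hypothetical sketch emits one pulse per beat, accents the first beat of each bar, and scales intensity by a 0-to-1 `volume` value as a stand-in for feedback that follows the player's dynamics; the intensity levels and names are arbitrary assumptions.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Pulse:
    time_s: float      # when to fire the vibration motor, from the start of playback
    intensity: float   # 0.0 (off) to 1.0 (strongest)

def tempo_pulses(bpm, beats_per_bar, bars, volume=1.0, accent=1.0, normal=0.4) -> List[Pulse]:
    """One haptic pulse per beat, with the downbeat of each bar accented.

    `volume` (0..1) scales every pulse, standing in for feedback that follows
    the player's dynamics. The accent/normal levels are arbitrary assumptions.
    """
    interval_s = 60.0 / bpm
    pulses = []
    for beat in range(beats_per_bar * bars):
        strength = accent if beat % beats_per_bar == 0 else normal
        pulses.append(Pulse(beat * interval_s, strength * volume))
    return pulses
```

At 120 BPM in 4/4, for instance, pulses fall 0.5 s apart with a stronger pulse every fourth beat, so the player can feel the bar line without looking at a display.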

Data Analytics & Performance Insights

Built‑in analytics tools collect metrics on practice habits, technique accuracy, and audience responses. Software dashboards visualize playing speed, note accuracy, and dynamic range over time. Musicians receive personalized reports that highlight strengths and areas to improve. Venue acoustics and crowd noise data can also inform future setlist planning or microphone placement. By leveraging these insights, artists refine their craft and engage audiences more effectively, making each show a step toward mastery.
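Two of the metrics mentioned here, timing accuracy and dynamic range, reduce to simple calculations. The sketch below assumes played and expected note onsets are already paired in order and uses MIDI note-on velocities (1 to 127) as a rough proxy for dynamics; the 30 ms tolerance and the function names are illustrative assumptions.

```python
from statistics import mean

def timing_report(played_s, expected_s, tolerance_ms=30.0):
    """Compare played note onsets with expected onsets, paired in order.

    Returns the mean absolute timing error (ms) and the fraction of notes
    that landed within `tolerance_ms` of where they should have.
    """
    errors_ms = [abs(p - e) * 1000.0 for p, e in zip(played_s, expected_s)]
    within = sum(1 for err in errors_ms if err <= tolerance_ms)
    return {"mean_abs_error_ms": mean(errors_ms), "within_tolerance": within / len(errors_ms)}

def velocity_range(velocities):
    """Spread of MIDI note-on velocities (1-127), a rough proxy for dynamic range."""
    return max(velocities) - min(velocities)
```

For example, onsets at 0.0, 0.51, 1.04, and 1.5 s against a 0.5 s grid give a mean error of 12.5 ms, with three of the four notes inside the 30 ms window. Tracking these numbers across sessions is what turns raw data into the kind of progress report described above.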

Modular, Upgradable Hardware-Software Ecosystems

Future-proof design lets users swap out modules, such as sensor arrays, effect boards, or connectivity cards, without replacing the entire instrument. Open architectures support community-driven software plugins and hardware expansions. As algorithms evolve or new sensors emerge, musicians install upgrades to extend functionality. This modularity lowers costs, reduces electronic waste, and fosters an ecosystem where creative tools grow alongside the artist, ensuring instruments remain relevant for years to come.
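A modular ecosystem needs a contract between the host instrument and its swappable modules. The sketch below is one hypothetical way to express that: a shared `Module` interface, a semantic-versioning-style compatibility check, and a rack that runs audio through installed modules in slot order. `HOST_API_VERSION`, the slot names, and `GainModule` are invented for illustration.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple

HOST_API_VERSION: Tuple[int, int] = (2, 1)  # hypothetical (major, minor) module API of the instrument

class Module(ABC):
    """Hypothetical contract every swappable audio module (effect board, synth voice, ...) implements."""
    name: str = "unnamed"
    api_version: Tuple[int, int] = (0, 0)

    @abstractmethod
    def process(self, samples: List[float]) -> List[float]:
        ...

def is_compatible(module_version, host_version=HOST_API_VERSION):
    """Semantic-versioning-style rule: same major version, minor no newer than the host's."""
    return module_version[0] == host_version[0] and module_version[1] <= host_version[1]

class ModuleRack:
    """Holds installed modules by slot name and runs audio through them in slot order."""

    def __init__(self):
        self.slots: Dict[str, Module] = {}

    def install(self, slot: str, module: Module) -> None:
        if not is_compatible(module.api_version):
            raise ValueError(f"{module.name} needs API {module.api_version}, host provides {HOST_API_VERSION}")
        self.slots[slot] = module  # swapping a module replaces only this slot

    def run(self, samples: List[float]) -> List[float]:
        for slot in sorted(self.slots):
            samples = self.slots[slot].process(samples)
        return samples

class GainModule(Module):
    """Example module: scales the signal by a fixed gain."""
    name = "gain"
    api_version = (2, 0)

    def __init__(self, gain: float):
        self.gain = gain

    def process(self, samples):
        return [s * self.gain for s in samples]
```

Checking compatibility at install time means a module built for a newer API is rejected up front instead of failing mid-performance, while older modules within the same major version keep working after host upgrades.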